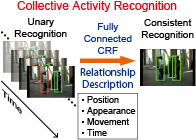

We propose a novel method for consistent collective activity recognition in videos. Collective activities are activities performed by multiple people, such as queuing in line, talking together, and waiting at an intersection. Because these activities are often difficult to distinguish using the appearance of a single person alone, the models proposed in recent studies exploit contextual information from other people nearby. However, these models do not sufficiently account for spatial and temporal consistency within a group (e.g., they enforce consistency only within an adjacent area). As a result, they cannot effectively correct temporary misclassifications or handle multiple collective activities occurring simultaneously in a scene. To overcome this drawback, this paper describes a method that integrates individual recognition results via fully connected conditional random fields (CRFs), which consider all interactions among the people in a video clip and adjust the strength of each interaction according to how similar the two people are. Unlike previous methods that restrict interactions heuristically (e.g., to within a fixed area), our method models “multi-scale” interactions over several features, i.e., position, size, motion, and time sequence, so that groups of various types, sizes, and shapes can be handled. Experimental results on two challenging video datasets show that our model outperforms both alternative graph topologies and state-of-the-art models.
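The abstract does not give the model's equations, so the following is only a rough illustration of the general idea: mean-field inference in a generic fully connected CRF with a Potts compatibility and Gaussian kernels over per-person features. Everything concrete here is a hypothetical choice for illustration, not the authors' implementation: the feature layout (x, y, frame index), the kernel bandwidths and weights, the two-label setup, and the function names `pairwise_kernel` and `mean_field`. Summing two kernels with different bandwidths is merely a stand-in for the paper's “multi-scale” interactions.

```python
import numpy as np


def softmax(x):
    """Row-wise softmax, shifted for numerical stability."""
    x = x - x.max(axis=1, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=1, keepdims=True)


def pairwise_kernel(features, kernels):
    """Hypothetical dense interaction strengths between every pair of people.

    Each (cols, bandwidth, weight) entry is one Gaussian kernel over a subset of
    feature columns; summing kernels with different bandwidths is an assumed
    stand-in for "multi-scale" interactions.
    """
    n = features.shape[0]
    K = np.zeros((n, n))
    for cols, bandwidth, weight in kernels:
        f = features[:, cols] / bandwidth
        sq_dist = ((f[:, None, :] - f[None, :, :]) ** 2).sum(axis=-1)
        K += weight * np.exp(-0.5 * sq_dist)
    np.fill_diagonal(K, 0.0)  # a person does not interact with itself
    return K


def mean_field(unary, K, n_iters=10):
    """Approximate marginals of a fully connected CRF with a Potts model.

    unary: (n_people, n_labels) energies, e.g. -log p from a per-person classifier.
    """
    Q = softmax(-unary)
    for _ in range(n_iters):
        # Up to a label-independent constant, a Potts penalty means each neighbor j
        # lowers the energy of label l by K[i, j] * Q[j, l].
        Q = softmax(-unary + K @ Q)
    return Q


# Hypothetical example: columns are x, y, and frame index; labels are 0 and 1.
features = np.array([[0.0, 0.0, 0.0],
                     [0.5, 0.0, 1.0],
                     [1.0, 0.0, 2.0],
                     [20.0, 20.0, 1.0]])
probs = np.array([[0.9, 0.1],   # person 0: confidently label 0
                  [0.8, 0.2],   # person 1: label 0
                  [0.4, 0.6],   # person 2: individually misclassified as label 1
                  [0.3, 0.7]])  # person 3: far away, genuinely label 1
kernels = [([0, 1, 2], np.array([2.0, 2.0, 5.0]), 1.0),  # fine scale: position + time
           ([0, 1], 10.0, 0.3)]                          # coarse scale: position only
K = pairwise_kernel(features, kernels)
Q = mean_field(-np.log(probs), K)
print(probs.argmax(axis=1))  # -> [0 0 1 1]
print(Q.argmax(axis=1))      # -> [0 0 0 1]
```

In this toy setup, person 2's individual classifier prefers label 1, but its strong similarity to persons 0 and 1 pulls it to label 0, while the distant person 3 keeps its own label because its interaction strengths are close to zero. Every pair is connected, yet similarity, not a fixed neighborhood, decides how much each pair matters.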

Publications

Takuhiro Kaneko, Masamichi Shimosaka, Shigeyuki Odashima, Rui Fukui, and Tomomasa Sato.

A fully connected model for consistent collective activity recognition in videos.

Pattern Recognition Letters, Vol. 43, pp. 109–118, 2014. [audible slides]

Takuhiro Kaneko, Masamichi Shimosaka, Shigeyuki Odashima, Rui Fukui, and Tomomasa Sato.

Consistent collective activity recognition with fully connected CRFs.

Proceedings of the 21st International Conference on Pattern Recognition (ICPR 2012), pp. 2792–2795, Tsukuba, November 2012.

ICPR 2012 Best Student Paper Award (Photo)

Takuhiro Kaneko, Masamichi Shimosaka, Shigeyuki Odashima, Rui Fukui, and Tomomasa Sato.

Viewpoint invariant collective activity recognition with relative action context.

In ECCV 2012 Proceedings (Part III), Lecture Notes in Computer Science, vol. 7585, pp. 253–262, Florence, Italy, October 2012, Springer Berlin Heidelberg.